What is Haystack?

Haystack is an open source framework for building production-ready LLM applications, retrieval-augmented generative pipelines and state-of-the-art search systems that work intelligently over large document collections. It lets you quickly try out the latest AI models while being flexible and easy to use. Our inspiring community of users and builders has helped shape Haystack into the modular, intuitive, complete framework it is today.

Building with Haystack

Haystack offers comprehensive tooling for developing state-of-the-art AI systems that use LLMs.

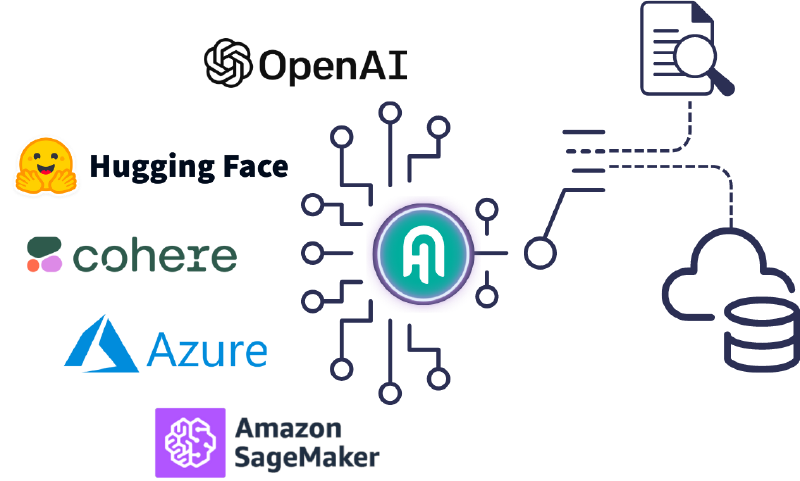

- Use models hosted on platforms like Hugging Face, OpenAI, Cohere, Mistral, and more.

- Use models deployed on SageMaker, Bedrock, Azure…

- Take advantage of our document stores: OpenSearch, Pinecone, Weaviate, Qdrant and more.

- Our growing ecosystem of community integrations provides tooling for evaluation, monitoring, data ingestion and every layer of your LLM application.

Some examples of what you can build include:

- Advanced RAG on your own data source, powered by the latest retrieval and generation techniques

- Chatbots and agents, powered by cutting-edge generative models like GPT-4, that can even call external functions and services

- Generative multi-modal question answering on a knowledge base containing mixed types of information: images, text, audio, and tables

- Information extraction from documents to populate your database or build a knowledge graph

This is just a small subset of the kinds of systems that can be created in Haystack.

End-to-end functionality for your LLM project

A successful LLM project requires more than just the language models. As an end-to-end framework, Haystack assists you in building your system every step of the way:

- Seamless inclusion of models from Hugging Face or other providers into your pipeline

- Integration of data sources for retrieval augmentation, from anywhere on the web

- Advanced dynamic templates for LLM prompting via the Jinja2 templating language

- Cleaning and preprocessing functions for various data formats and sources

- Integration with your preferred document store: keep your GenAI apps up to date with Haystack’s indexing pipelines, which help you prepare and maintain your data

- Specialized evaluation tools that use different metrics to evaluate the entire system or its individual components

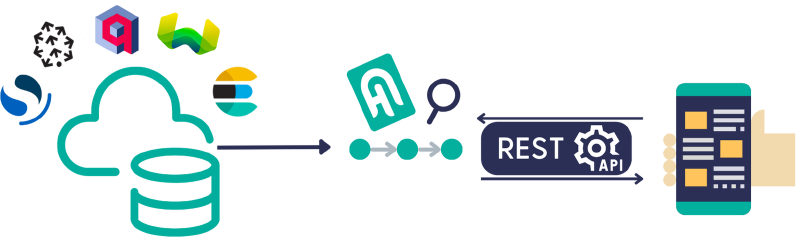

- Hayhooks module to serve Haystack Pipelines through HTTP endpoints

- A customizable logging system that supports structured logging and tracing correlation out of the box

- Code instrumentation that collects spans and traces at strategic points of the execution path, with support for OpenTelemetry and Datadog already in place

But that’s not all: Haystack also offers metadata filtering, device management for locally running models, and even advanced RAG techniques like Hypothetical Document Embeddings (HyDE). Whatever your AI heart desires, you’re likely to find it in Haystack. And if not? We’ll build it together.
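To make the prompt templating idea from the list above more concrete, here is a minimal sketch of filling a RAG-style prompt from retrieved documents and a question. It is a stand-in, not Haystack code: it uses Python's built-in `string.Template` instead of Jinja2 so that it runs without extra dependencies, and the template text, the `build_prompt` helper, and the sample documents are all hypothetical. Jinja2 syntax differs from what is shown here.

```python
from string import Template

# Hypothetical prompt template. This uses string.Template as a
# dependency-free stand-in; Jinja2 templates use a different syntax.
PROMPT = Template(
    "Answer the question using only the context below.\n"
    "Context:\n$context\n"
    "Question: $question\n"
    "Answer:"
)


def build_prompt(documents, question):
    """Render the prompt from a list of document strings and a question."""
    # Number each document so the model can refer to its sources.
    context = "\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(documents, start=1)
    )
    return PROMPT.substitute(context=context, question=question)


prompt = build_prompt(
    ["Haystack is an open source framework.", "Pipelines consist of components."],
    "What do pipelines consist of?",
)
print(prompt)
```

The point of a template is that the prompt wording lives in one place while the documents and the question change with every query.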

Building blocks

Haystack uses two primary concepts to help you build fully functional and customized end-to-end GenAI systems.

Components

At the core of Haystack are its components: building blocks that can perform tasks like document retrieval, text generation, or creating embeddings. A single component is already quite powerful. It can manage local language models or communicate with a hosted model through an API.

While Haystack offers many components you can use out of the box, it also lets you create your own custom components, which is as easy as writing a Python class. Explore the collection of integrations that includes custom components developed by our partners and community, which you can freely use.

You can connect components together to build pipelines, which are the foundation of LLM application architecture in Haystack.
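As a rough illustration of what "writing a Python class" can look like, the sketch below models a component as a class with a `run` method that takes named inputs and returns named outputs. This is plain Python under stated assumptions, not Haystack's API: the `WordCounter` class and its input and output names are hypothetical, and the step that registers a class with the framework (a `@component` decorator in Haystack 2.x) is left out so the example runs with the standard library only.

```python
class WordCounter:
    """Toy component: one named input (`text`), two named outputs."""

    def run(self, text: str) -> dict:
        words = text.split()
        # Outputs are returned by name so that other components
        # could consume each of them individually.
        return {"words": words, "count": len(words)}


counter = WordCounter()
result = counter.run(text="components are building blocks")
print(result["count"])
```

Returning outputs by name is what makes it possible to wire one output to one component and another output somewhere else.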

Pipelines

Pipelines are powerful abstractions that allow you to define the flow of data through your LLM application. They consist of components.

As a developer, you have complete control over how you arrange the components in a pipeline. Pipelines can branch out, join, and also cycle back to another component. You can compose Haystack pipelines that can retry, loop back, and potentially even run continuously as a service.

Pipelines are essentially graphs, or even multigraphs. A single component with multiple outputs can connect to another single component with multiple inputs or to multiple components, thanks to the flexibility of pipelines.
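The graph idea can be sketched in a few lines of plain Python. The `ToyPipeline` class below is a hypothetical model, not Haystack's `Pipeline`: it stores components as nodes and connections as edges between a named output and a named input. Two edges run between the same pair of components (which is what makes it a multigraph), and one output also branches to a second component. As a simplification, it runs components in the order they were added, so it only handles graphs without cycles.

```python
class ToyPipeline:
    """Plain-Python model of a pipeline as a directed multigraph."""

    def __init__(self):
        self.components = {}  # name -> callable returning a dict of outputs
        self.edges = []       # (sender, output_name, receiver, input_name)

    def add_component(self, name, func):
        self.components[name] = func

    def connect(self, sender, receiver):
        sender_name, output_name = sender.split(".")
        receiver_name, input_name = receiver.split(".")
        self.edges.append((sender_name, output_name, receiver_name, input_name))

    def run(self, first, **inputs):
        # Simplification: components run in insertion order (acyclic only).
        pending = {first: inputs}
        results = {}
        for name, func in self.components.items():
            if name not in pending:
                continue
            outputs = func(**pending.pop(name))
            results[name] = outputs
            # Forward each connected output to the input it is wired to.
            for s_name, s_out, r_name, r_in in self.edges:
                if s_name == name:
                    pending.setdefault(r_name, {})[r_in] = outputs[s_out]
        return results


def splitter(text):
    words = text.split()
    return {"words": words, "count": len(words)}


def reporter(words, count):
    return {"report": f"{count} words, longest: {max(words, key=len)}"}


def shouter(words):
    return {"shouted": [w.upper() for w in words]}


pipeline = ToyPipeline()
pipeline.add_component("splitter", splitter)
pipeline.add_component("reporter", reporter)
pipeline.add_component("shouter", shouter)

# Two edges between the same pair of components: a multigraph.
pipeline.connect("splitter.words", "reporter.words")
pipeline.connect("splitter.count", "reporter.count")
# The same output also branches out to a second component.
pipeline.connect("splitter.words", "shouter.words")

results = pipeline.run("splitter", text="haystack pipelines are graphs")
print(results["reporter"]["report"])
print(results["shouter"]["shouted"])
```

A real pipeline engine also has to decide when a component has received all its inputs and how to handle loops; this sketch sidesteps both by assuming an acyclic graph built in execution order.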

To get you started, Haystack offers many example pipelines for different use cases: indexing, agentic chat, RAG, extractive QA, function calling, web search and more.

Who’s it for?

Haystack is for everyone looking to build AI apps — LLM enthusiasts and newbies alike. You don’t need to understand how the models work under the hood. All you need is some basic knowledge of Python to dive right in.

Our community

At the heart of Haystack is the vibrant open source community that thrives on the diverse backgrounds and skill sets of its members. We value collaboration greatly and encourage our users to shape Haystack actively through GitHub contributions. Our Discord server is a space where community members can connect, seek help, and learn from each other.

We also organize live online and in-person events, webinars, and office hours, which are an opportunity to learn and grow.

💌 Sign up for our monthly email newsletter

🎥 Subscribe to the Haystack YouTube channel

🐘 Follow us on Twitter or Mastodon

📆 Subscribe to our lu.ma calendar to stay informed about events

Enter the Haystack universe

- Start building with cookbooks in Colab notebooks

- Learn interactively via tutorials

- Have a look at the documentation

- Read and contribute to our blog

- Visit our GitHub repo